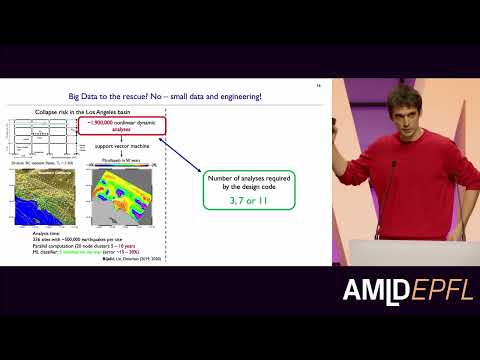

The availability of large amounts of data to guide predictive models has sparked a renaissance in many fields of human endeavor, including computer science and medicine. Yet one of the facts of life in natural hazards engineering and structural engineering is that we typically do not have "big data". On the one hand, historical, high-quality data are scarce; on the other, many physics-based approaches for simulating data are prohibitively expensive computationally. We do, however, typically have, or can generate, "small data" through both numerical and physical testing. Ignoring such data is a missed opportunity, especially given the existing body of theoretical knowledge. In this talk, I outline a "data-centric" path to efficient and robust seismic collapse risk quantification. Specifically, I showcase a first-ever, domain-specific data augmentation approach through a case study. In essence, this approach transforms "small data" into "good, big data", allowing data-driven surrogates to achieve "big data"-level performance without requiring big data.

Download the slides for this talk.Download ( PDF, 8843.76 MB)