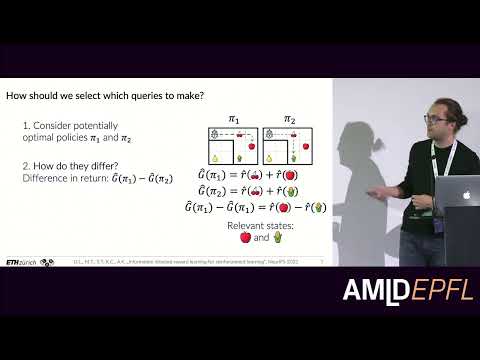

Reinforcement learning (RL) typically assumes that a well-specified reward function is available, but this is rarely the case in practical settings. This talk discusses how RL agents can learn what to do from human feedback when no standard reward signal is available. In particular, we focus on learning robust representations of tasks in a sample-efficient way.

Download the slides for this talk (PDF, 4388.48 MB).