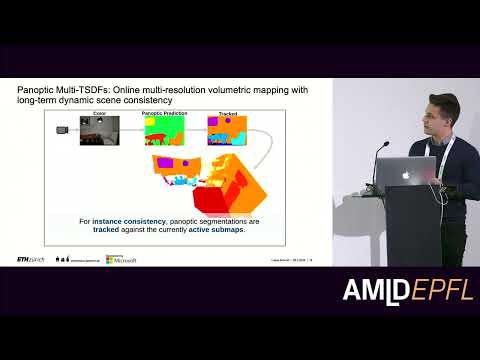

Volumetric maps are a crucial tool for robot interaction tasks. In practice, robots are often subject to tracking errors and long-term scene changes, which the map representation needs to account for. To this end, we present two volumetric map representations. First, we present a method that incrementally fuses neural implicit surface representations directly in latent space, showing improved robustness to camera tracking errors. Second, we demonstrate how high-level semantic information can be leveraged to incorporate long-term scene changes into a multi-resolution volumetric map during robot operation.

Download the slides for this talk (PDF, 38934.98 MB).