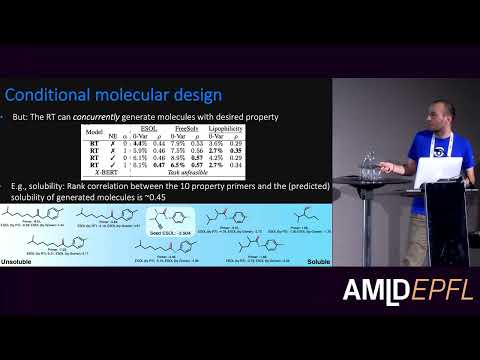

Despite the rise of deep generative models in molecular and protein design, current approaches develop property predictors and generative models independently of one another. This conflicts with the intuitive expectation that a powerful property-driven generative model should, first and foremost, excel at recognizing and understanding the property of interest. To build a model with this characteristic, we present the Regression Transformer (RT), a method that abstracts regression as a conditional sequence modeling problem. This yields a dichotomous model that can seamlessly switch between solving regression tasks and conditional generation tasks. While the RT is a generic, domain-independent methodology, we verify its utility on tasks in chemical and protein language modeling. The RT can surpass traditional regression models in molecular property prediction tasks despite being trained with a cross-entropy loss. Importantly, the same model proves to be a powerful conditional generative model and outperforms specialized approaches in a constrained molecular property optimization benchmark. In sum, our experiments underline the dichotomy of the RT: a single model that excels in both property prediction and structure-constrained conditional generation. The Regression Transformer opens the door to "Swiss army knife" models that excel at both regression and conditional generation, with particular applications in property-driven, local exploration of chemical or protein space.
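The abstract does not spell out how a numerical property becomes part of a token sequence, so the following is only a minimal sketch under stated assumptions. It assumes that each digit of the property value becomes its own token tagged with its decimal place, that these property tokens are prepended to a character-level SMILES sequence, and that the same input format serves both tasks depending on which tokens are masked. The digit-token format, the `|` separator, the `[MASK]` token and the function names `tokenize_property`, `regression_input` and `generation_input` are all hypothetical illustrations, not the RT's published implementation.

```python
from typing import List, Set

MASK = "[MASK]"  # hypothetical mask token
SEP = "|"        # hypothetical separator between property and sequence tokens


def tokenize_property(name: str, value: float, decimals: int = 3) -> List[str]:
    """Encode a non-negative float as one token per digit, tagged with its decimal place.

    Hypothetical scheme: digit d at place p becomes "_d_p_",
    e.g. 0.41 -> ["<qed>", "_0_0_", "_._", "_4_-1_", "_1_-2_"].
    """
    if value < 0:
        raise ValueError("this sketch only handles non-negative values")
    integer_part, fraction_part = f"{value:.{decimals}f}".split(".")
    tokens = [f"<{name}>"]  # tag token naming the property
    for i, digit in enumerate(integer_part):
        place = len(integer_part) - 1 - i  # 10^place for integer digits
        tokens.append(f"_{digit}_{place}_")
    tokens.append("_._")
    for i, digit in enumerate(fraction_part):
        tokens.append(f"_{digit}_{-(i + 1)}_")  # 10^-(i+1) for fractional digits
    return tokens


def regression_input(prop_tokens: List[str], seq_tokens: List[str]) -> List[str]:
    # Regression: keep the property tag, mask every numeric token, and
    # let the model fill the value in, conditioned on the full sequence.
    masked_prop = [prop_tokens[0]] + [MASK] * (len(prop_tokens) - 1)
    return masked_prop + [SEP] + seq_tokens


def generation_input(prop_tokens: List[str], seq_tokens: List[str],
                     positions: Set[int]) -> List[str]:
    # Conditional generation: keep the (desired) property value visible and
    # mask selected sequence positions for the model to fill in.
    masked_seq = [MASK if i in positions else t for i, t in enumerate(seq_tokens)]
    return prop_tokens + [SEP] + masked_seq


if __name__ == "__main__":
    prop = tokenize_property("qed", 0.41, decimals=2)
    smiles = list("CCO")  # naive character-level tokenization of ethanol
    print(regression_input(prop, smiles))
    print(generation_input(prop, smiles, {2}))
```

The point of the shared format is that a single masked-language model can be trained on both kinds of masking, so predicting a property and generating a sequence conditioned on a property become the same "fill in the blanks" operation.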

Download the slides for this talk (PDF, 1907.44 MB).